📝 Publications

A full publication list is available on my Google Scholar page.

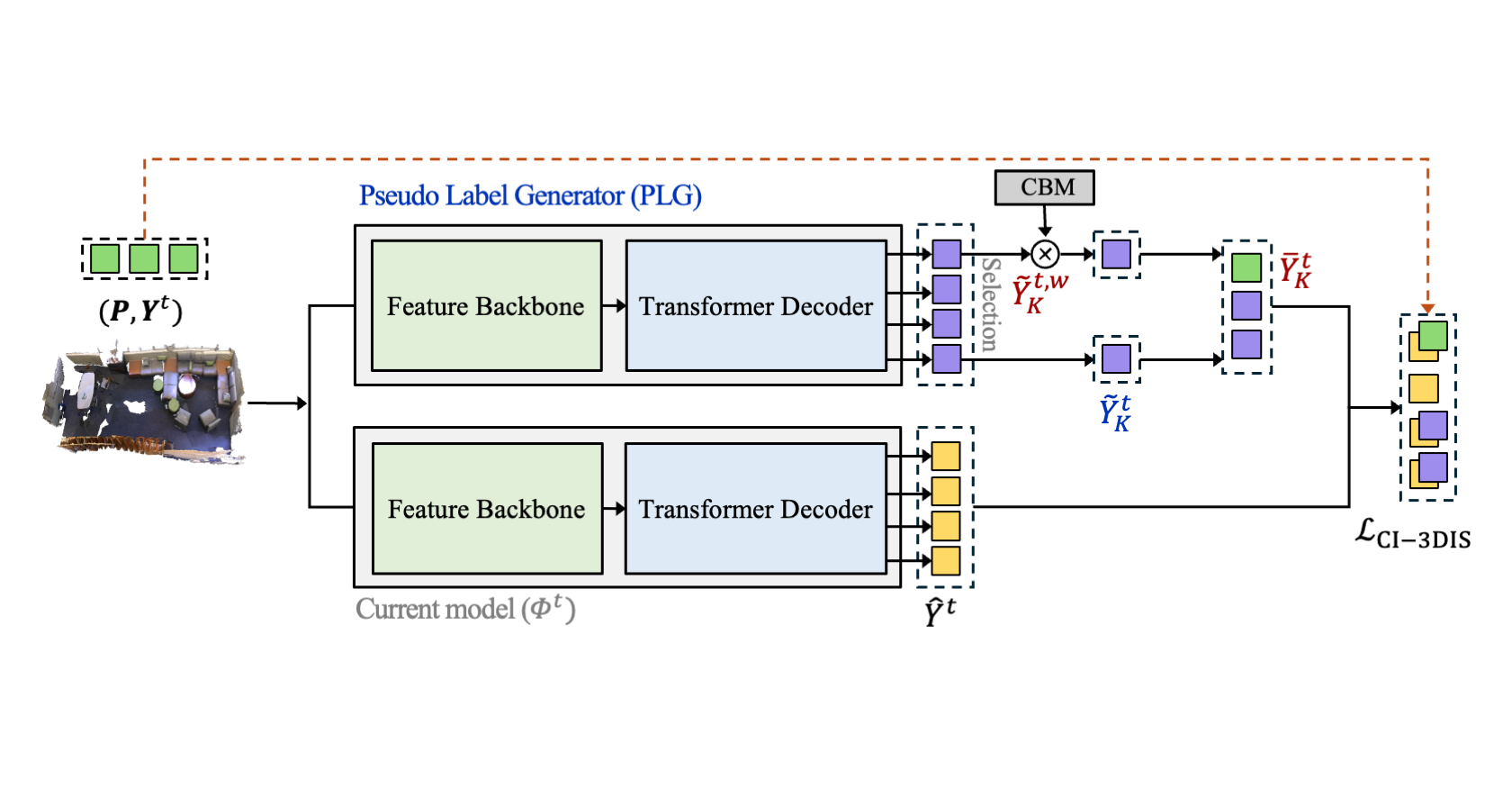

CLIMB-3D: Continual Learning for Imbalanced 3D Instance Segmentation

Vishal Thengane, Jean Lahoud, Hisham Cholakkal, Rao Muhammad Anwer, Lu Yin, Xiatian Zhu, Salman Khan

We introduce class-incremental learning for point cloud instance segmentation and curate benchmarks from the long-tailed ScanNet200 dataset. To address class imbalance, we propose a novel module that promotes more uniform performance across frequent and rare classes.

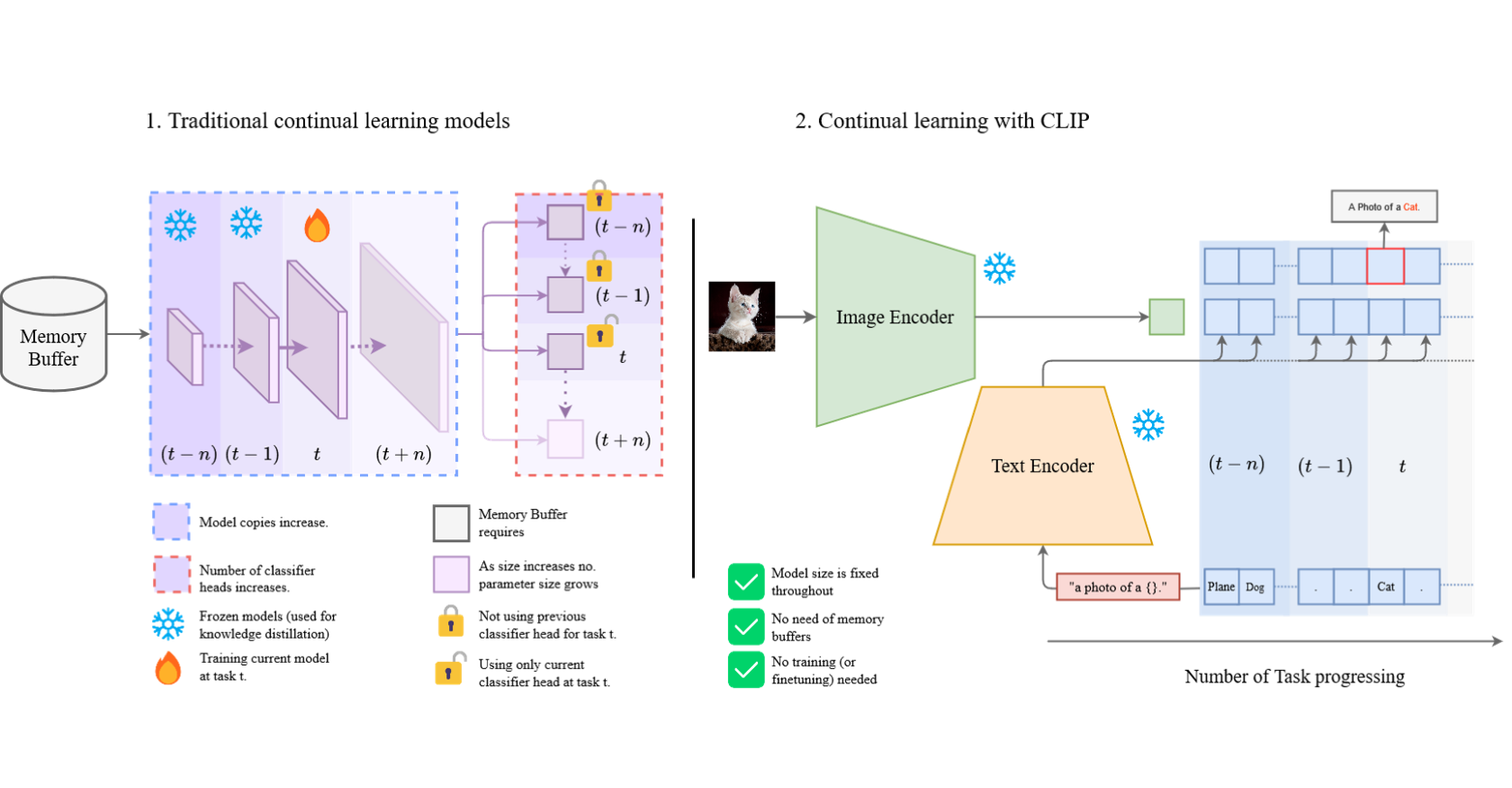

CLIP Model is an Efficient Continual Learner

Vishal Thengane, Salman Khan, Munawar Hayat, Fahad Khan

This work demonstrates that a frozen CLIP model, used in zero-shot mode, achieves state-of-the-art (SOTA) performance across multiple continual learning (CL) settings without any fine-tuning. Across five benchmarks, CLIP surpasses existing methods while requiring no re-training, memory replay, or architectural changes, making it a strong and surprisingly simple baseline for future CL research.
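To make the "no re-training, no replay" point concrete, here is a minimal sketch of how a frozen zero-shot classifier handles class-incremental tasks: each new task only appends text embeddings for its class names, and prediction is an argmax of cosine similarity over every class seen so far. The class `ZeroShotIncrementalClassifier` and the toy 3-dimensional embeddings are hypothetical stand-ins for the outputs of CLIP's frozen image and text encoders; this illustrates zero-shot CLIP inference in general, not the paper's evaluation code.

```python
import numpy as np


class ZeroShotIncrementalClassifier:
    """Hypothetical wrapper: stores one L2-normalised text embedding per class seen so far."""

    def __init__(self) -> None:
        self.class_names: list[str] = []
        self.text_embs: list[np.ndarray] = []

    def add_task(self, names: list[str], embs: list[np.ndarray]) -> None:
        # No gradient step and no replay buffer: a new task only grows the label set.
        for name, emb in zip(names, embs):
            self.class_names.append(name)
            self.text_embs.append(emb / np.linalg.norm(emb))

    def predict(self, image_emb: np.ndarray) -> str:
        img = image_emb / np.linalg.norm(image_emb)
        sims = np.stack(self.text_embs) @ img  # cosine similarity to every seen class
        return self.class_names[int(np.argmax(sims))]


# Toy vectors stand in for CLIP text/image embeddings (assumption for illustration).
clf = ZeroShotIncrementalClassifier()
clf.add_task(["cat", "dog"], [np.array([1.0, 0.0, 0.0]), np.array([0.0, 1.0, 0.0])])
print(clf.predict(np.array([0.9, 0.1, 0.0])))   # cat
clf.add_task(["car"], [np.array([0.0, 0.0, 1.0])])
print(clf.predict(np.array([0.1, 0.0, 0.95])))  # car
print(clf.predict(np.array([0.9, 0.1, 0.0])))   # cat (old class unaffected)
```

Because the encoders are never updated, adding a task cannot overwrite what was learned for earlier classes; the only thing that changes is the set of candidate labels.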

Strong Gravitational Lensing Parameter Estimation with Vision Transformer

Kuan-Wei Huang, Geoff Chih-Fan Chen, Po-Wen Chang, Sheng-Chieh Lin, Chia-Jung Hsu, Vishal Thengane, Joshua Yao-Yu Lin

We explore Vision Transformers (ViTs) for estimating the parameters of simulated lensed quasar systems, offering a fast, competitive alternative to Markov chain Monte Carlo (MCMC) and convolutional neural networks (CNNs). ViTs perform well on mass-related lensing parameters, showing promise for future lensing analyses.

Pre-prints

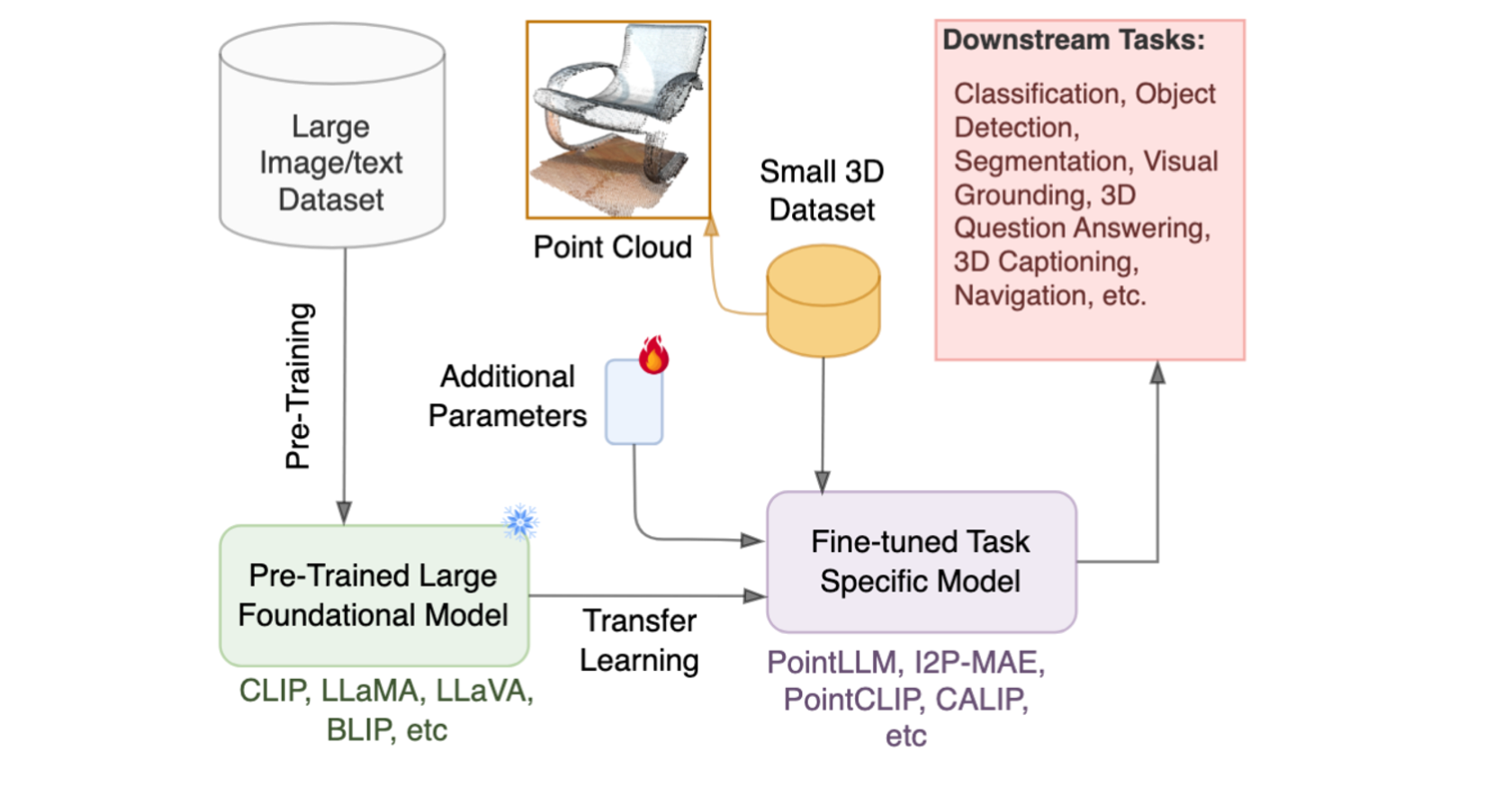

Foundational Models for 3D Point Clouds: A Survey and Outlook

Vishal Thengane, Xiatian Zhu, Salim Bouzerdoum, Son Lam Phung, Yunpeng Li

This paper surveys recent advances in foundation models (FMs) for 3D point cloud understanding, focusing on how pretrained 2D and language models help overcome challenges such as limited labelled data and high computational costs. It reviews strategies for building 3D FMs and their application to core 3D tasks, and highlights future research directions.

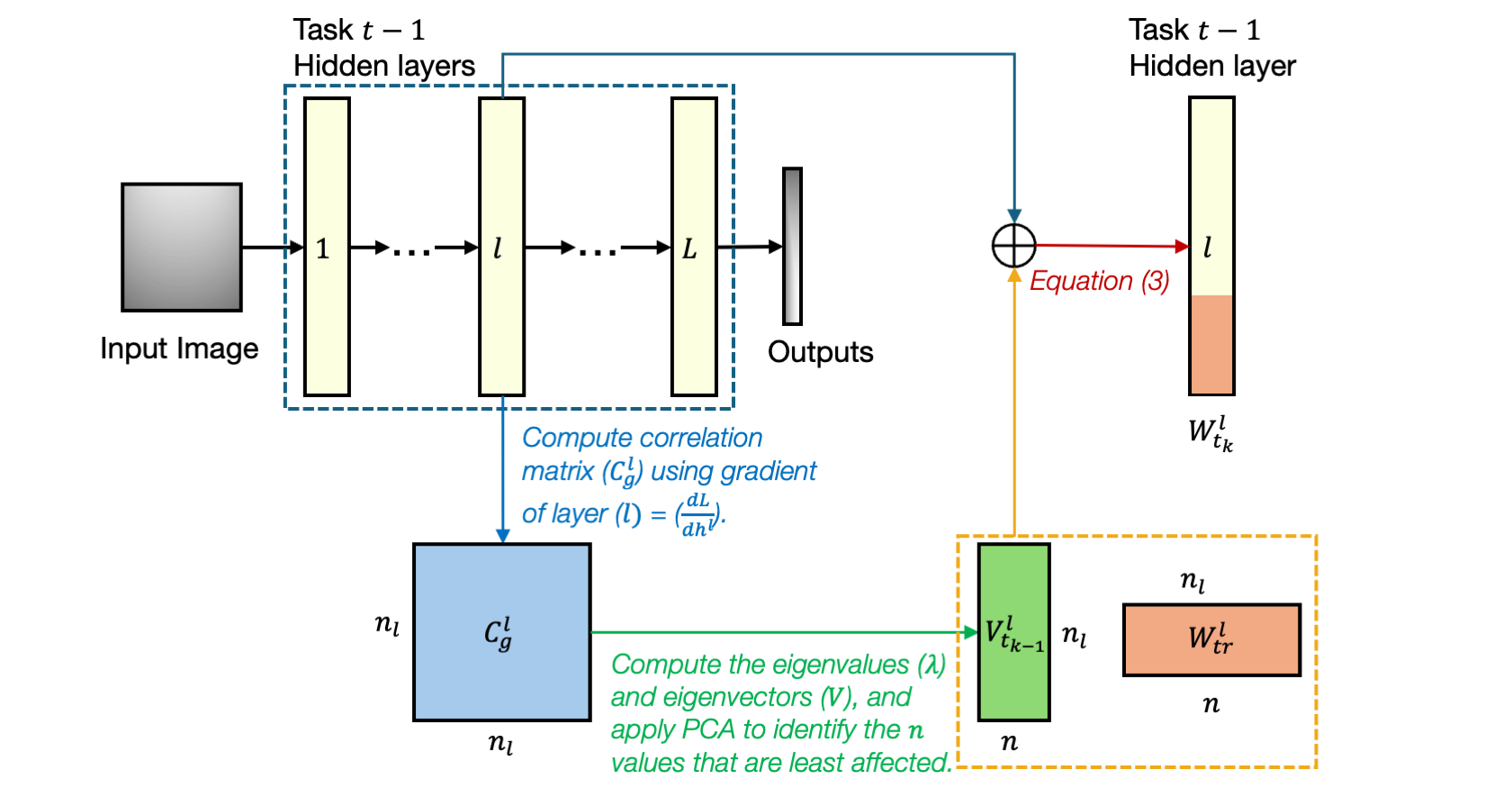

Gradient Correlation Subspace Learning against Catastrophic Forgetting

Vishal Thengane, Tammuz Dubnov

This paper proposes Gradient Correlation Subspace Learning (GCSL) to mitigate catastrophic forgetting in class-incremental learning. GCSL identifies the weight subspace least affected by previous tasks and projects the weight updates for each new task into it. The method can be applied to one or more layers of a network, and the subspace size can be varied from layer to layer and task to task.
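A minimal sketch of the projection idea, under an assumption the summary does not spell out: here the "least affected" subspace is taken to be the eigenvectors of a previous-task gradient correlation matrix with the smallest eigenvalues, and a new-task update is projected onto that basis. The function names, the single flattened layer, and the toy gradients are hypothetical; the paper describes the actual GCSL procedure.

```python
import numpy as np


def least_affected_subspace(prev_grads: np.ndarray, k: int) -> np.ndarray:
    """Return a (d, k) orthonormal basis of the directions least used by previous tasks.

    prev_grads has shape (n, d): one flattened gradient of a single layer per row,
    collected on earlier tasks (hypothetical setup for illustration).
    """
    corr = prev_grads.T @ prev_grads   # (d, d) gradient correlation matrix
    _, eigvecs = np.linalg.eigh(corr)  # eigh returns eigenvalues in ascending order
    return eigvecs[:, :k]              # k eigenvectors with the smallest eigenvalues


def project_update(update: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Project a new-task update of shape (d,) onto span(basis)."""
    return basis @ (basis.T @ update)


# Toy example: old-task gradients only touch the first 3 of 6 weights.
rng = np.random.default_rng(0)
prev = np.zeros((20, 6))
prev[:, :3] = rng.normal(size=(20, 3))

basis = least_affected_subspace(prev, k=3)
new_update = rng.normal(size=6)
projected = project_update(new_update, basis)

# The projected update is orthogonal to every stored old-task gradient.
print(np.allclose(prev @ projected, 0.0, atol=1e-8))  # True
```

Under this reading, `k` is the knob the summary mentions: it can be chosen separately for each layer and each task, trading plasticity for the new task against protection of earlier ones.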